In response to unexpected SEO traffic loss caused by misidentified crawl activity, we built a custom Googlebot exemption mechanism into our CDN. The result: full indexing coverage, zero compromise in bot protection, and a 34% increase in organic traffic.

Industry

Funtech

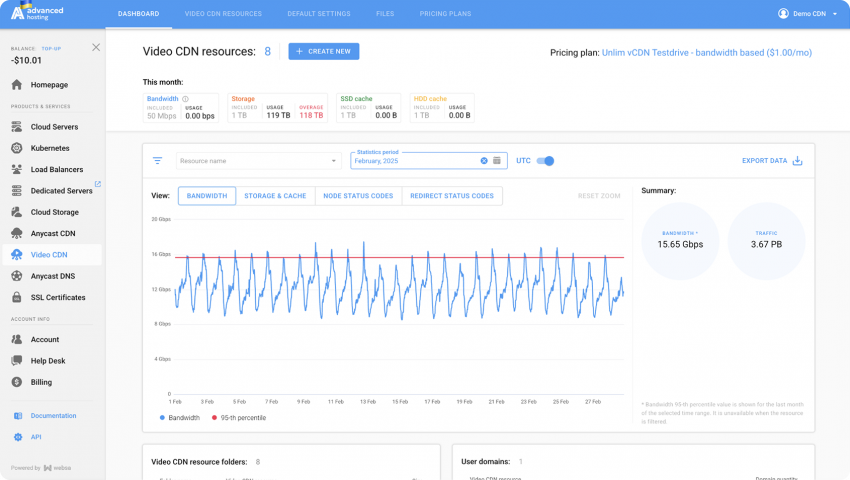

Our client is a mid-size video streaming platform operating in a content-heavy, high-traffic environment where SEO performance directly impacts growth. Their infrastructure includes a security layer with advanced anti-bot protections, optimized for detecting and blocking malicious actors such as scrapers, DDoS attackers, and credential stuffers.

Challenge

During Google’s algorithm updates, Googlebot often ramps up crawl activity to re-index affected sites. Our client’s anti-hotlink system was tuned to block requests exceeding a fixed rate per IP, a rule that typically stops botnet traffic and abuse.

However, this system did not distinguish between Googlebot and harmful bots. When Googlebot’s frequency exceeded the threshold, the crawler was blocked or throttled, resulting in:

- Delayed or partial indexing

- Reduced search visibility

- A sudden drop in organic traffic

Google does not announce crawl-rate changes ahead of time, so the server treated the unexpected increase in bot traffic as an attack.
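To make the failure mode concrete, here is a minimal sketch of a fixed-rate, per-IP rule of the kind described above. The class name, the threshold, the window length, and the IP address are all illustrative assumptions; the client's actual rule set is not shown in this case study.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Blocks any IP that exceeds `limit` requests within one time window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        # Maps (ip, window index) -> number of requests seen in that window.
        # A real system would expire old windows; this sketch does not.
        self._counts = defaultdict(int)

    def allow(self, ip, now):
        window = int(now // self.window_seconds)
        self._counts[(ip, window)] += 1
        return self._counts[(ip, window)] <= self.limit


# Hypothetical threshold: 3 requests per second per IP.
limiter = FixedWindowLimiter(limit=3, window_seconds=1.0)

# Five requests from one address within the same second.
decisions = [limiter.allow("203.0.113.7", now=0.1 * i) for i in range(5)]
print(decisions)  # prints [True, True, True, False, False]
```

The rule only sees an IP and a request count, so a legitimate crawler that speeds up is indistinguishable from an abusive client.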

Solution

After analyzing the client’s issue, we developed smart exemptions and rule-tuning features to restore healthy indexing. We designed and implemented targeted functionality within the CDN that lets Googlebot bypass rate limits while preserving strong anti-bot protections elsewhere.

Our approach included:

1. Googlebot whitelisting logic

We engineered a verification mechanism that reliably identifies Googlebot traffic and distinguishes genuine crawlers from impersonators.
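This case study does not detail how the verification works. One common approach, which Google documents for verifying its crawlers, is a reverse DNS lookup followed by a forward confirmation. The sketch below assumes that approach; the function names are hypothetical, and the resolvers are passed in as parameters so the logic can be exercised without network access.

```python
import socket

# Domains Google documents for the reverse DNS names of its crawlers.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def reverse_lookup(ip):
    """Return the PTR hostname for an IP, or None if the lookup fails."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:
        return None


def forward_lookup(hostname):
    """Return the IPv4 addresses a hostname resolves to (empty on failure)."""
    try:
        return socket.gethostbyname_ex(hostname)[2]
    except OSError:
        return []


def is_verified_googlebot(ip, reverse=reverse_lookup, forward=forward_lookup):
    """Check an IP with a reverse lookup plus forward confirmation."""
    hostname = reverse(ip)
    if not hostname:
        return False
    if not hostname.lower().rstrip(".").endswith(GOOGLE_CRAWLER_SUFFIXES):
        return False
    # Forward-confirm: the hostname must resolve back to the same IP.
    # Without this step, anyone who controls their own PTR record could
    # claim a googlebot.com hostname.
    return ip in forward(hostname)
```

A User-Agent header alone is not sufficient, since any client can send one. Because DNS lookups are slow relative to request handling, a production system would likely cache the verdict per IP.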

2. Exemption from rate-limiting

Once verified, Googlebot was granted “trusted crawler” status. This status bypassed all frequency thresholds and IP-based blocking rules, allowing Googlebot to crawl freely regardless of volume.
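The ordering of the checks is what matters here: the trust check runs before any rate accounting. A minimal sketch, assuming a hypothetical `make_guard` helper that wraps whatever verification and rate-limit functions the platform already has:

```python
def make_guard(is_trusted_crawler, rate_limit_allows):
    """Build a request filter in which verified crawlers skip rate limits.

    Both arguments are callables supplied by the caller:
    is_trusted_crawler(ip) -> bool and rate_limit_allows(ip, now) -> bool.
    """

    def allow(ip, now):
        # Trusted crawlers return early, so their volume never reaches
        # the frequency thresholds or IP-based blocking rules.
        if is_trusted_crawler(ip):
            return True
        return rate_limit_allows(ip, now)

    return allow


# Stand-ins for illustration: one "verified" address, and a limiter that
# behaves as if every client has already exceeded its threshold.
verified_ips = {"192.0.2.10"}
guard = make_guard(
    is_trusted_crawler=lambda ip: ip in verified_ips,
    rate_limit_allows=lambda ip, now: False,
)

print(guard("192.0.2.10", now=0.0))   # prints True
print(guard("203.0.113.7", now=0.0))  # prints False
```

Everything that is not verified still passes through the existing limiter unchanged, which is how the exemption avoids weakening protection for other traffic.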

3. Platform-wide configurability

We extended this solution beyond a single client, turning a specific issue into a platform-wide enhancement. We began by manually adjusting the client’s settings so that legitimate crawlers were properly recognized. We then updated the protection functionality across the entire platform and added a toggle for Googlebot (and other known crawlers) to every client dashboard. Since then, the feature has enabled our CDN users to balance tight security and search visibility in a controlled, predictable manner.
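As an illustration of what a per-client toggle could look like in configuration terms, here is a minimal sketch. The `BotPolicy` class, the client identifiers, and the choice to enable the Googlebot exemption by default are all assumptions made for the example, not a description of the actual dashboard.

```python
from dataclasses import dataclass, field


@dataclass
class BotPolicy:
    """Hypothetical per-client settings for known crawlers."""

    # Crawler name -> whether verified traffic from it skips rate limits.
    exemptions: dict = field(default_factory=lambda: {"googlebot": True})

    def set_exempt(self, crawler, enabled):
        self.exemptions[crawler.lower()] = enabled

    def is_exempt(self, crawler):
        # Crawlers that were never configured are not exempt,
        # so protection fails closed.
        return self.exemptions.get(crawler.lower(), False)


policies = {"client-a": BotPolicy(), "client-b": BotPolicy()}
policies["client-b"].set_exempt("googlebot", False)  # client-b opts out
policies["client-a"].set_exempt("bingbot", True)     # client-a adds a crawler

print(policies["client-a"].is_exempt("Googlebot"))  # prints True
print(policies["client-b"].is_exempt("Googlebot"))  # prints False
```

Keeping the setting per client means one customer can tighten or relax crawler access without changing the behavior for anyone else on the platform.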

Results

The initial implementation resolved the client’s indexing delays and produced a 34% increase in organic traffic. Googlebot resumed high-frequency crawling without triggering false positives.

Following this success, we rolled out the solution across our broader client base, leading to several key outcomes:

- 100% indexing coverage during subsequent Google updates

- Sizeable SEO traffic gains

- No compromise in anti-DDoS or malicious bot detection

- Platform-wide adoption of trusted bot exemptions

- Improved crawl health scores across multiple ecosystems

Clients appreciated the ability to fine-tune bot access directly in their CDN settings. For high-traffic, SEO-sensitive businesses, this added control meant:

- Consistent content discovery

- Lower SEO risk during Google rollouts

- Faster reaction time to indexing anomalies

What began as an emergency fix became a long-term feature. It not only restored search visibility for one client but also helped other clients facing the same challenge during algorithm updates.

Fast content delivery and custom features on demand. See how AH’s CDN helps you optimize performance.